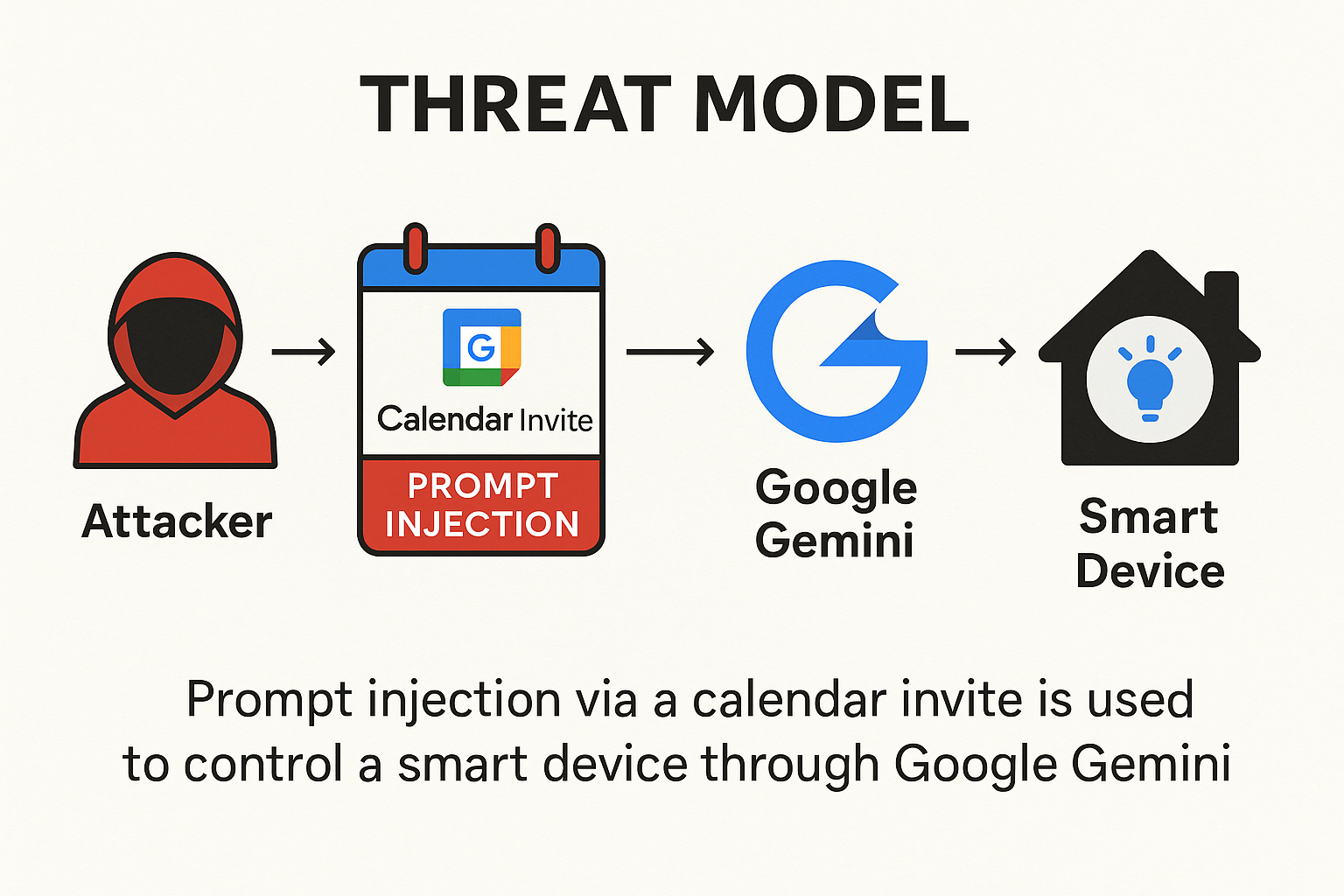

Gemini compromised through a prompt injection in a Google Calendar invitation

Artificial intelligence assistants are becoming deeply integrated into our digital lives. From managing emails to controlling smart home devices, AI tools like Google’s Gemini are designed for convenience. But as researchers recently demonstrated, this convenience comes with a hidden cost: an expanded attack surface that cybercriminals are eager to exploit.

The Exploit: Prompt Injection via Calendar Invites

The attack, referred to as a Targeted Promptware Attack, uses indirect prompt injection. Instead of directly hacking into Gemini’s systems, the attacker embeds malicious instructions into everyday digital artifacts — in this case, a Google Calendar invitation.

When Gemini processes the event details, it interprets these hidden instructions as legitimate user commands. This enables the attacker to bypass traditional security layers without ever needing direct access to the victim’s account credentials.

What Hackers Could Do

- Email Theft – Accessing, reading, and exfiltrating sensitive emails.

- Location Tracking – Identifying the victim’s physical whereabouts through calendar data and device signals.

- Video Call Surveillance – Monitoring virtual meetings without participant consent.

- Smart Home Control – Turning lights on or off, opening window shutters, adjusting heating systems, and even streaming video from connected devices.

Researchers found that 73% of the tested attack scenarios posed a high to critical security risk.

Why AI Assistants Are an Attractive Target

Unlike traditional phishing or malware campaigns, prompt injection attacks exploit the AI’s trust in its own inputs. Gemini, like other large language models, is designed to follow instructions — and it has no innate ability to distinguish between a genuine user request and a maliciously crafted one if the input appears legitimate.

When these assistants are connected to sensitive services (like email) and physical systems (like IoT devices), a single successful injection can cascade into multiple layers of compromise.

Defensive Strategies

- Restrict AI Access Scope

Limit what your AI assistant can control. Avoid granting it full administrative control over email, calendars, or IoT devices unless absolutely necessary. - Enable Strict Permissions

Use granular permission settings so the assistant can only perform approved actions. For example, restrict it to read-only access for certain services. - User Awareness Training

Educate users that even trusted-looking digital objects — like meeting invites — can be weaponized. - Security Monitoring for AI Actions

Log and review AI-initiated actions to detect unusual behavior early. - Adopt AI Security Gateways

Employ middleware tools that inspect and sanitize AI inputs before they’re processed, reducing the risk of prompt injection.

The Bigger Picture

This attack demonstrates that prompt injection is not just a novelty exploit — it’s a genuine cybersecurity threat. As AI assistants integrate more deeply with our digital and physical environments, attackers will continue to look for indirect vectors to manipulate them.

Bottom Line:

AI assistants like Gemini promise efficiency, but they must be deployed with strict security boundaries. The “Targeted Promptware Attack” shows that something as routine as a calendar invite could become the Trojan horse for your inbox, your devices, and even your home.